October 07, 2025

October 07, 2025From Millions to Billions

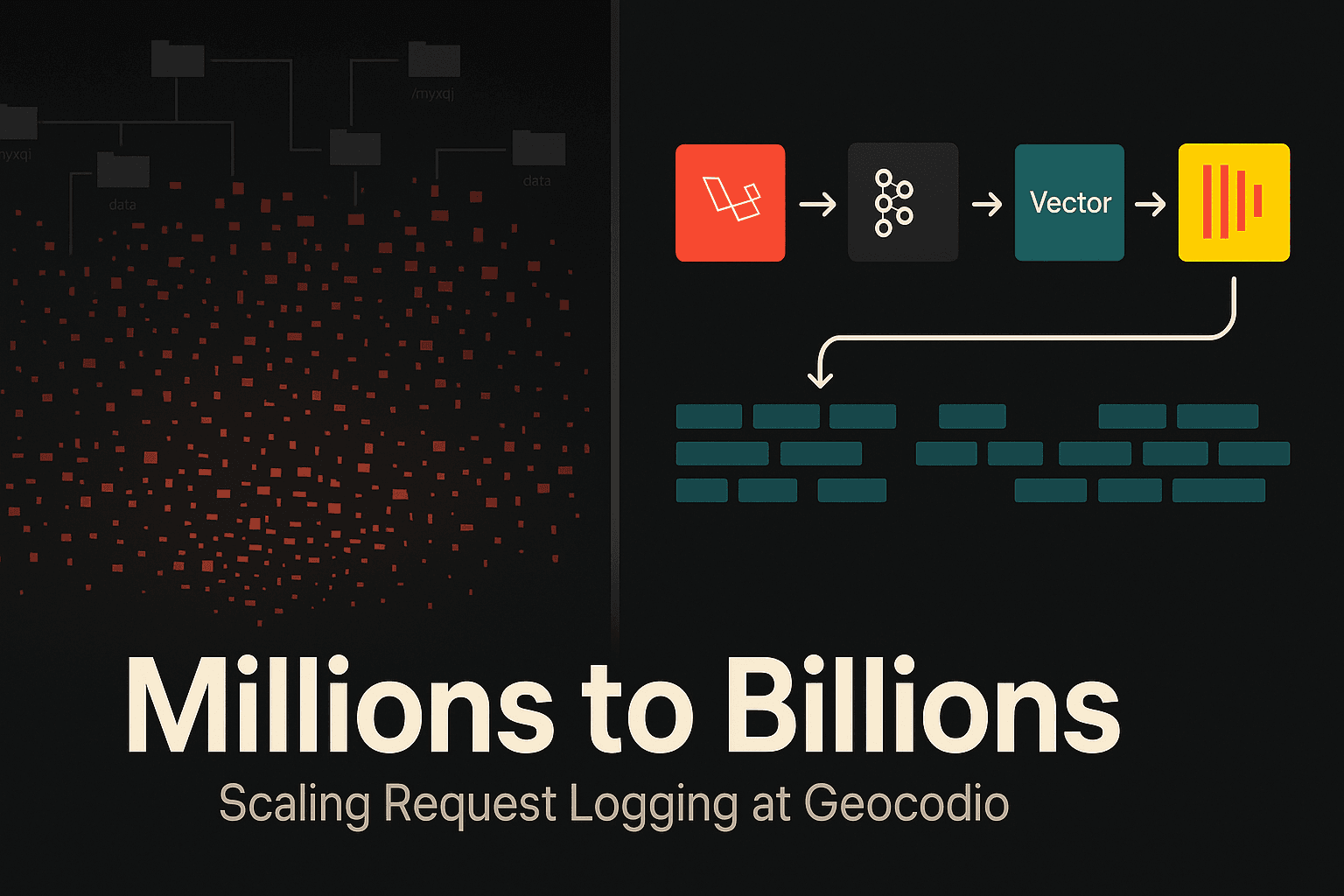

How we solved request logging at scale by moving from MariaDB to ClickHouse, Kafka, and Vector after our deprecated database engine couldn't keep up with billions of monthly requests.

This article is based on a talk that I gave at PHP[tek] in 2025.

Scaling Request Logging from Millions to Billions with ClickHouse, Kafka, and Vector

Why Track All These Requests?

Geocodio offers a pay-as-you-go metered plan where users get 2,500 free geocoding lookups per day. This means we need to:

- Track the 2,500 free tier requests

- Continue tracking above that threshold for billing

- Let users view their usage in real-time on their dashboard

- Give admins the ability to query this data for support and debugging

- Store request details so we can replay customer requests when debugging issues

This isn't just nice-to-have data. It's tied directly to our billing and customer support workflows.

The Original Architecture

Our initial setup was pretty straightforward:

We were using MariaDB with the TokuDB storage engine, which was specifically designed for high-performance insert operations with incredibly good compression—often 5 to 10 times better than InnoDB. When you're dealing with billions of records, storage efficiency matters.

Here's what our request tracking table looked like:

CREATE TABLE `requests_2020_05` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`user_id` int(11) NOT NULL,

`access_id` int(11) NOT NULL,

`query` text,

`count` int(11) DEFAULT 1,

`fields` varchar(255) DEFAULT NULL,

`fields_count` int(11) DEFAULT 0,

`accuracy_score` decimal(5,4) DEFAULT NULL,

`accuracy_type` varchar(50) DEFAULT NULL,

`http_status` int(11) DEFAULT 200,

`response_time_ms` int(11) DEFAULT NULL,

`queue_time_ms` int(11) DEFAULT NULL,

`user_agent` text,

`source_ip_address` varchar(45) DEFAULT NULL,

`request_type` varchar(20) DEFAULT NULL,

`hostname` varchar(100) DEFAULT NULL,

`created_at` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,

`deleted` tinyint(1) DEFAULT 0,

PRIMARY KEY (`id`),

KEY `user_id` (`user_id`),

KEY `access_id` (`access_id`),

KEY `created_at` (`created_at`)

) ENGINE=TokuDB DEFAULT CHARSET=utf8mb4;

Notice that table name—requests_2020_05. We don't keep one massive table. Instead, we partition by year and month, making it easy to roll over month after month and drop old data. We also maintain an archive table where we roll up stats we need to carry forward.

How Request Tracking Works in Laravel

In our Laravel application, we register a singleton RequestTracker class in the IoC container:

public function register(): void

{

$this->app->singleton(RequestTracker::class);

}

Using a singleton means we get the same instance of RequestTracker every time we resolve it, no matter where we are in our application. This lets us collect request data throughout the request lifecycle:

// Early in the request

resolve(RequestTracker::class)

->setApiKey($request->get('api_key'))

->setQuery($request->all())

->setCount($addressCount)

->setType('geocode')

->setFields($fields);

// Later, after processing

resolve(RequestTracker::class)

->setHttpStatus(200)

->setAccuracyScore($result->accuracy)

->fillFromRequest($request)

->track();

The magic happens in terminable middleware. After we've sent the response to the user, we persist the tracking data:

public function trackStoredParameters(): void

{

try {

$requestsRepository = new RequestsRepository;

$user = null;

if (! empty($this->parametersToStore['user_id'])) {

$user = User::find($this->parametersToStore['user_id']);

}

$requestsRepository->insert($user, $this->parametersToStore, false);

} catch (PDOException $e) {

info('Could not track request: '.$e->getMessage());

app('sentry')->captureException($e);

}

$this->parametersToStore = null;

}

This approach gives us error handling (users still get their data if tracking fails) and performance benefits (we don't make users wait for us to log their request).

The Problems Started Piling Up

This setup worked for years, but we started hitting some serious issues:

TokuDB is deprecated. It's been deprecated since 2021. No more maintenance, no updates, and we can't upgrade our database version. That's a problem.

Performance degradation at scale. Over the course of a month, the database grows from nothing to billions of records. For our highest-volume customers, who might make tens of millions of requests per day, queries got slower and slower. By the end of the month, some users couldn't even load their usage page—the queries would just time out.

Cache stampede risk. We had a Redis cache cluster in front of the database, but earlier this year we lost cache keys. We recovered quickly because the cache was still populated, but if we ever had to repopulate from the database at the end of the month? Those queries would be incredibly intensive, and we'd have some serious downtime.

When your request logging is tied to billing, downtime isn't an option.

Attempt #1: Just Swap MariaDB for ClickHouse

My first thought was pretty simple: keep the architecture the same, just swap out MariaDB for ClickHouse.

I'd heard about ClickHouse from a talk Jess Archer gave at Laracon a couple years ago. She talked about using it to power Laravel Nightwatch, their new analytics platform. If the Laravel team is using it to track request data, it seemed like the perfect fit for us too.

Plus, learning something radically different sounded like a lot of fun.

Understanding ClickHouse

ClickHouse is fundamentally different from MariaDB. Here's the thing—MariaDB is row-oriented, which is how we typically think about databases. But ClickHouse is column-oriented, which is a totally different way of thinking about and querying data.

Row-Oriented (MariaDB):

| id | user_id | count | response_time_ms | created_at |

|----|---------|-------|------------------|------------|

| 1 | 100 | 5 | 42 | 2025-01-15 |

| 2 | 101 | 3 | 38 | 2025-01-15 |

Data is stored together by row. Great for transactional operations.

Column-Oriented (ClickHouse):

id: [1, 2, 3, ...]

user_id: [100, 101, 102, ...]

count: [5, 3, 8, ...]

response_time_ms: [42, 38, 51, ...]

created_at: [2025-01-15, 2025-01-15, ...]

Data is stored together by column. Super efficient for analytics and aggregations across large datasets.

The other big differences:

- MariaDB is optimized for transactions, high concurrency, small fast row-level operations, strong consistency, and current operational data that changes.

- ClickHouse is optimized for analytic queries, scary good compression, batch processing, fast aggregates across huge datasets, and historical data that doesn't change.

Perfect. We're not updating these request records once they're written. It's static historical data.

How ClickHouse Stores Data

ClickHouse uses this concept called "parts." Each insert operation creates immutable data part folders on the file system:

/var/lib/clickhouse/data/default/requests/

├── 20250115_1_1_0/

│ ├── user_id.bin

│ ├── count.bin

│ ├── response_time_ms.bin

│ └── ...

├── 20250115_2_2_0/

└── 20250115_3_3_0/

These parts get merged in the background by merge tree engines. ClickHouse automatically combines smaller parts into bigger parts on a schedule, following a tiered strategy based on part size.

I updated the code to check for a ClickHouse feature flag and insert into ClickHouse while also continuing to insert into MariaDB:

protected function publish(array $parameters): bool

{

if (config('geocodio.kafka.enabled', false)) {

$this->publishToKafka($parameters);

}

return $this->publishToDatabase($parameters);

}

I deployed it slowly. One server at a time through our public cluster. Everything looked fine. I started rolling it out to our private cluster, where our highest-volume customers live on dedicated servers...

And Slack started blowing up.

The TOO_MANY_PARTS Error

TOO_MANY_PARTS

That's the error I got. Over and over. Because we were inserting on every single request—those small row-level inserts—we were creating parts faster than the merge process could handle. We were completely overwhelming the system.

I went to the ClickHouse docs (which are fantastic, by the way), and they had a whole page dedicated to this error. Somewhere on that page, they mentioned buffer tables.

Attempt #2: Buffer Tables

Buffer tables are a special table engine in ClickHouse that accumulates data in memory and then automatically flushes to your target table based on time or size thresholds. It's designed specifically to optimize small frequent inserts.

I created the buffer table and made my best guess at the configuration:

CREATE TABLE requests_buffer AS requests

ENGINE = Buffer(

default, -- database

requests, -- target table

16, -- number of layers

10, -- min time (seconds)

100, -- max time (seconds)

10000, -- min rows

1000000, -- max rows

10000000, -- min bytes

100000000 -- max bytes

);

I went through the deployment again. Public cluster went fine. Got past the point where we failed last time in the private cluster. Kept going. Finished the deployment. Checked Sentry at the end of the day—everything was quiet.

I went to bed feeling pretty good.

I woke up the next morning to Sentry absolutely screaming at me.

The TOO_MANY_LINKS Error

All night long, a new error:

TOO_MANY_LINKS

We'd run out of Linux file system links. I SSH'd into the server and tried to list the storage directory. I couldn't. The command just hung. I'd never seen that before.

I went to Claude and asked for help. Eventually we figured out how to at least count the directories:

find /var/lib/clickhouse -type d | wc -l

35 million directories and part files.

The system couldn't even create another file. I thought the buffer table would fix it, but we'd just compounded the issue. I was stumped. I couldn't even navigate the server anymore.

I had to bail out. It was time to ask for help.

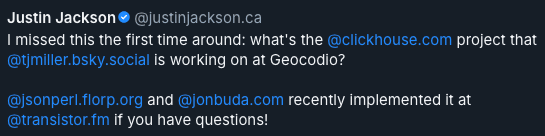

Enter Justin Jackson and Jon Buda

I have a podcast called Slightly Caffeinated with my friend Chris Gmyr. It's mostly personal updates and whatever tech we're dealing with. I'd posted an episode talking about this ClickHouse implementation struggle.

Justin Jackson from Transistor.fm (where we host the podcast) reached out on Bluesky:

Justin, I have so many questions.

He pointed me to Jon Buda, and Jon mentioned something super interesting: the folks at Honeybadger were also using ClickHouse for their new Insights analytics platform.

I have a relationship with the Honeybadger team—I rewrote their PHP and Laravel integrations years ago. So I hit up Josh and Ben. Two days later, I was on a Zoom call explaining our setup.

Ben got this big smile on his face.

"Man, you gotta batch up your inserts."

They proceeded to shared their ingestion platform architecture with me. They were so confident in their approach that I really had no choice but to grab onto it and run with it.

The Solution: Kafka + Vector + ClickHouse

They told me to introduce Kafka and Vector into the mix. I really wanted to avoid adding more infrastructure, but I was at the point when I was willing to try anything to make this work.

What is Kafka?

Kafka is an event streaming platform. It's distributed pub/sub messaging designed for high throughput and fault tolerance. It stores streams of records in topics (categories), and it enables real-time data processing pipelines.

Here's the visual:

Producers write events to Kafka, Kafka stores them in a distributed log, and consumers read those events out.

Why did we need this?

- Durable storage

- Handles super high throughput

- Decouples producers from consumers

- Scales horizontally

We were dealing with a scale problem going from millions to billions. Kafka can easily scale with us.

Building a Kafka Publisher

There's an awesome Laravel package for working with Kafka, but I ran into some weird connection issues. So I went back to Claude and we decided to write our own publisher using the RdKafka PHP extension:

namespace Shared;

use Exception;

use RdKafka\Conf;

use RdKafka\Producer;

use RdKafka\ProducerTopic;

class KafkaPublisher

{

protected ?Producer $producer = null;

protected ?string $brokers = null;

protected array $topicInstances = [];

protected function createProducer(): Producer

{

$conf = new Conf;

$conf->set('bootstrap.servers', $this->brokers);

// Reliability settings

$conf->set('message.send.max.retries', config('geocodio.kafka.retries', 3));

$conf->set('retry.backoff.ms', config('geocodio.kafka.retry_backoff_ms', 200));

$conf->set('socket.timeout.ms', config('geocodio.kafka.socket_timeout_ms', 1000));

$conf->set('message.timeout.ms', config('geocodio.kafka.message_timeout_ms', 5000));

$conf->set('queue.buffering.max.messages', config('geocodio.kafka.max_buffered_messages', 100000));

$conf->set('queue.buffering.max.ms', config('geocodio.kafka.buffer_flush_ms', 100));

$conf->set('compression.codec', config('geocodio.kafka.compression', 'snappy'));

return new Producer($conf);

}

public function publish(string $topic, array $data, ?string $key = null): bool

{

$this->initializeProducer();

$topicInstance = $this->getTopic($topic);

$message = $this->encodeMessage($data, $topic);

$topicInstance->produce(RD_KAFKA_PARTITION_UA, 0, $message, $key);

$this->producer->poll(0);

$this->flush(1000);

return true;

}

}

Pretty straightforward. The cool thing is we can configure retries, backoff, compression, and buffering all through config values.

Then we updated our repository to publish to Kafka:

protected function publishToKafka(array $parameters): void

{

try {

resolve(KafkaPublisher::class)

->publish(

self::KAFKA_TOPIC,

$this->formatRequestData($parameters)

);

} catch (Throwable $e) {

info('Could not publish request to Kafka: '.$e->getMessage());

app('sentry')->captureException($e);

}

}

We're still publishing to MariaDB for now, but this lets us run both systems in parallel and audit the data at the end of the month.

What is Vector?

Vector is a high-performance observability data pipeline. It's lightweight, ultra-fast, written in Rust, and maintained by Datadog. It supports 100+ different inputs and outputs.

Why did we need Vector?

- Efficient way to get data from Kafka to ClickHouse

- Consumes messages from Kafka

- Batches them into optimal sizes

- Handles retries and back pressure

- Optional transformation layer for schema changes

The guys at Honeybadger told me you really want to be hitting at minimum 30,000 to 50,000 records being batch inserted at once. That's a much bigger scale than we were ever thinking about with those individual row-level inserts.

Configuring Vector

Here's our Vector configuration:

sources:

kafka_requests:

type: kafka

bootstrap_servers: kafka:9092

topics:

- geocodio_requests

group_id: vector_consumer

auto_offset_reset: earliest

transforms:

prepare_logs:

type: remap

inputs:

- kafka_requests

source: |

. = parse_json!(.message)

sinks:

clickhouse:

type: clickhouse

endpoint: http://clickhouse:8123

inputs:

- prepare_logs

database: default

table: requests

skip_unknown_fields: true

auth:

strategy: basic

user: geocodio_usr

password: LocalDevelopmentPassword

batch:

timeout_secs: 5

buffer:

max_events: 120000

request:

retry_attempts: 2

retry_max_duration_secs: 10

healthcheck:

enabled: true

We're doing time-based inserts—every 5 seconds, Vector grabs all the events that happened over those 5 seconds and does one big batch insert. Instead of creating tons of tiny parts, we're creating fewer but much bigger parts. ClickHouse handles that totally fine.

Moving to ClickHouse Cloud

Initially, we wanted to self-host everything. That's kind of our philosophy at Geocodio. But when I looked at the operational requirements, reality set in:

Self-Hosted ClickHouse:

- 3 ClickHouse nodes for replication

- 3 ZooKeeper nodes for coordination

- 3 Kafka brokers for high availability

- Vector instances

That's a lot of infrastructure for a small team that also needs to write features and fix bugs.

ClickHouse Cloud wasn't as expensive as I expected. And the benefits were huge:

- Operational simplicity

- Scaling on demand

- Built-in high availability and redundancy

- Automatic updates (ClickHouse has a weekly release cadence)

- Expert support when we need it

The Honeybadger guys mentioned that ClickHouse releases bug fixes and features constantly. "You really need to architect this so you can update everything quickly and frequently." Updating a database while actively inserting data sounds scary. Having ClickHouse handle that for us was a massive win.

The Migration Strategy

From day one, I thought about how to do this with zero downtime. The approach:

- Dual-write everything. Insert into both Kafka (→ ClickHouse) and MariaDB simultaneously for a full month.

- Feature flags. Be able to turn Kafka on or off independently from database writes.

- Audit the data. At the end of the month, compare what's in ClickHouse versus MariaDB.

- Gradual cutover. Once we're confident, start cutting reads over to ClickHouse (also behind feature flags).

Since this is tied to billing, we can't afford to get it wrong. This strategy gives us validation at every step.

Here's the code that makes it possible:

protected function publish(array $parameters): bool

{

if (config('geocodio.kafka.enabled', false)) {

$this->publishToKafka($parameters);

}

return $this->publishToDatabase($parameters);

}

Simple, but effective. We can flip Kafka on or off without touching the database writes.

Key Takeaways

Batch inserts are critical for ClickHouse. You can't treat it like a transactional database with row-level inserts. You need to batch aggressively—30k-50k records minimum.

Don't be afraid to ask for help. Talking to Jon Buda and the Honeybadger team saved me weeks of trial and error. Sometimes the best solution is learning from people who've already solved the problem.

Feature flags for the win. Being able to turn Kafka and ClickHouse on or off independently gave us confidence to deploy incrementally and validate every step.

Column-oriented databases are a different beast. If you're doing analytics or tracking large volumes of append-only data, ClickHouse is incredibly powerful. But you have to think differently about how data is stored and queried.

Big Thanks

Big thanks to Justin Jackson and Jon Buda from Transistor.fm for pointing me in the right direction. And huge thanks to Josh and Ben at Honeybadger for being so generous with their time and insights. They were super confident in their approach, and that confidence gave me the push I needed to commit to this solution.

I'm super stoked on this. Now excuse me while I go find more excuses to use ClickHouse.